The rapid progress of large language models (LLMs) has catalyzed the emergence of multimodal large language models (MLLMs) that unify visual understanding and image generation within a single framework. However, most existing MLLMs rely on autoregressive (AR) architectures, which impose inherent limitations on future development, such as the raster-scan order in image generation and restricted causal context modeling. In this work, we challenge the dominance of AR-based approaches by introducing FUDOKI, a unified multimodal model based on discrete flow matching, as an alternative to conventional AR paradigms. By leveraging metric-induced probability paths with kinetic-optimal velocities, our framework goes beyond the previous masking-based corruption process, enabling iterative refinement and richer bidirectional context integration during generation. To mitigate the high cost of training from scratch, we initialize FUDOKI from pre-trained AR-based MLLMs and adaptively transition to the discrete flow matching paradigm. Experimental results show that FUDOKI achieves performance comparable to state-of-the-art AR-based MLLMs across both visual understanding and image generation tasks, highlighting its potential as a foundation for next-generation unified multimodal models.
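
To make "metric-induced probability path" concrete, here is a minimal sketch. It assumes the softmax form common in the discrete flow matching literature, p_t(x | x_1) = softmax(-β_t · d(x, x_1)), where d is a distance between tokens and β_t grows from 0 toward infinity as t goes from 0 to 1. This form, the toy distances, and the name `metric_path` are illustrative assumptions, not details confirmed by this page or FUDOKI's actual parameterization.

```python
import math


def metric_path(distances, beta):
    """Return softmax(-beta * d) over a toy vocabulary.

    distances[i] is an assumed distance d(token_i, target), with
    d(target, target) == 0. Larger beta concentrates mass on the target.
    """
    logits = [-beta * d for d in distances]
    top = max(logits)  # subtract the max for numerical stability
    weights = [math.exp(l - top) for l in logits]
    total = sum(weights)
    return [w / total for w in weights]


# Hypothetical 4-token vocabulary; index 0 is the target token.
distances = [0.0, 0.5, 1.0, 2.0]

uniform = metric_path(distances, beta=0.0)   # beta = 0: pure noise, no target information
partial = metric_path(distances, beta=2.0)   # intermediate: nearby tokens are more likely
peaked = metric_path(distances, beta=50.0)   # large beta: almost all mass on the target
```

Unlike a mask-based corruption, the intermediate distribution favors tokens that are close to the target under the metric, so a partially denoised sample can already be "nearly right" rather than simply masked or unmasked.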

Discrete flow matching denoises step by step, transforming noise into vivid images.

Tokens can be revised iteratively: even tokens changed in an earlier step can be updated again (red border). The final answer is marked with a yellow dashed border, illustrating flexible step-by-step reasoning.

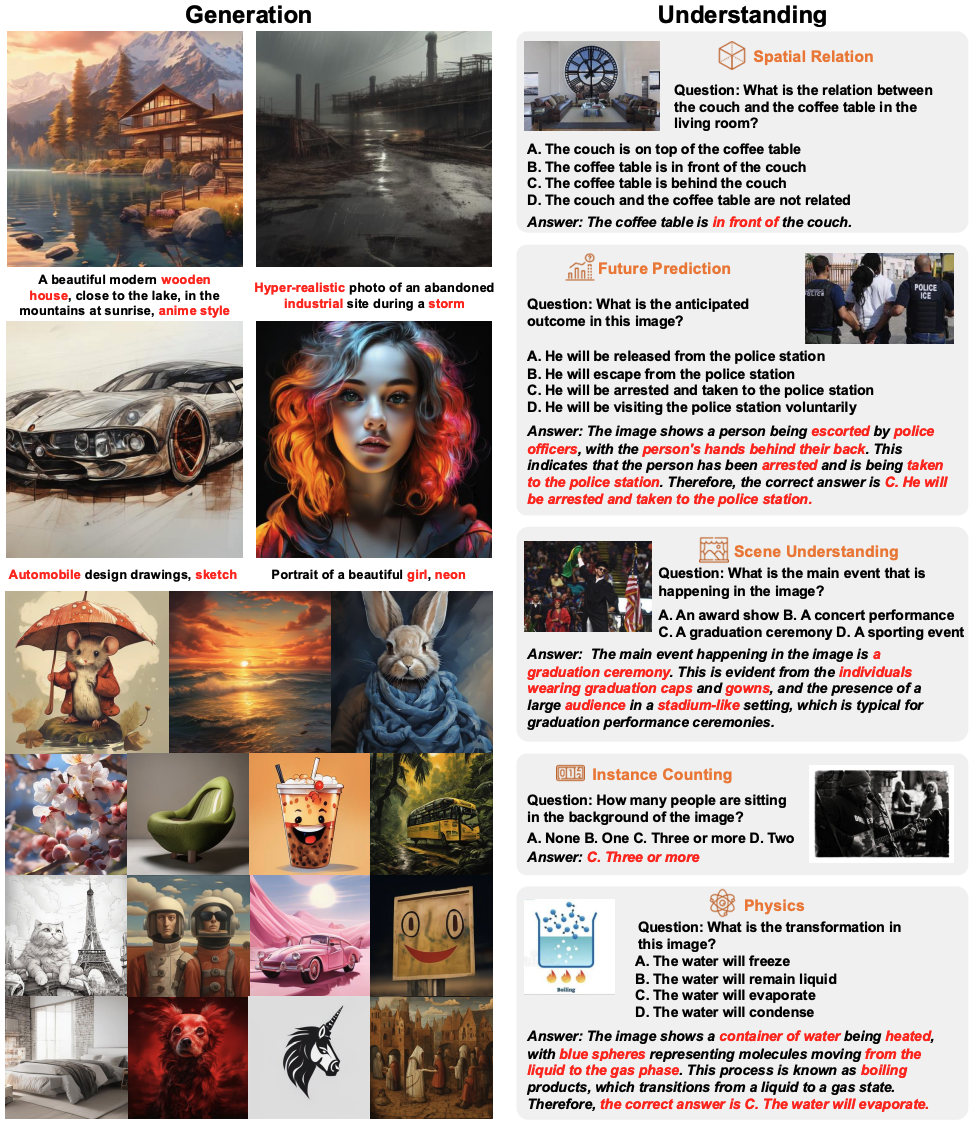

Qualitative results of FUDOKI's visual generation and understanding capabilities. FUDOKI builds on discrete flow matching for both visual and textual modalities, and performs both understanding and generation under one unified paradigm.

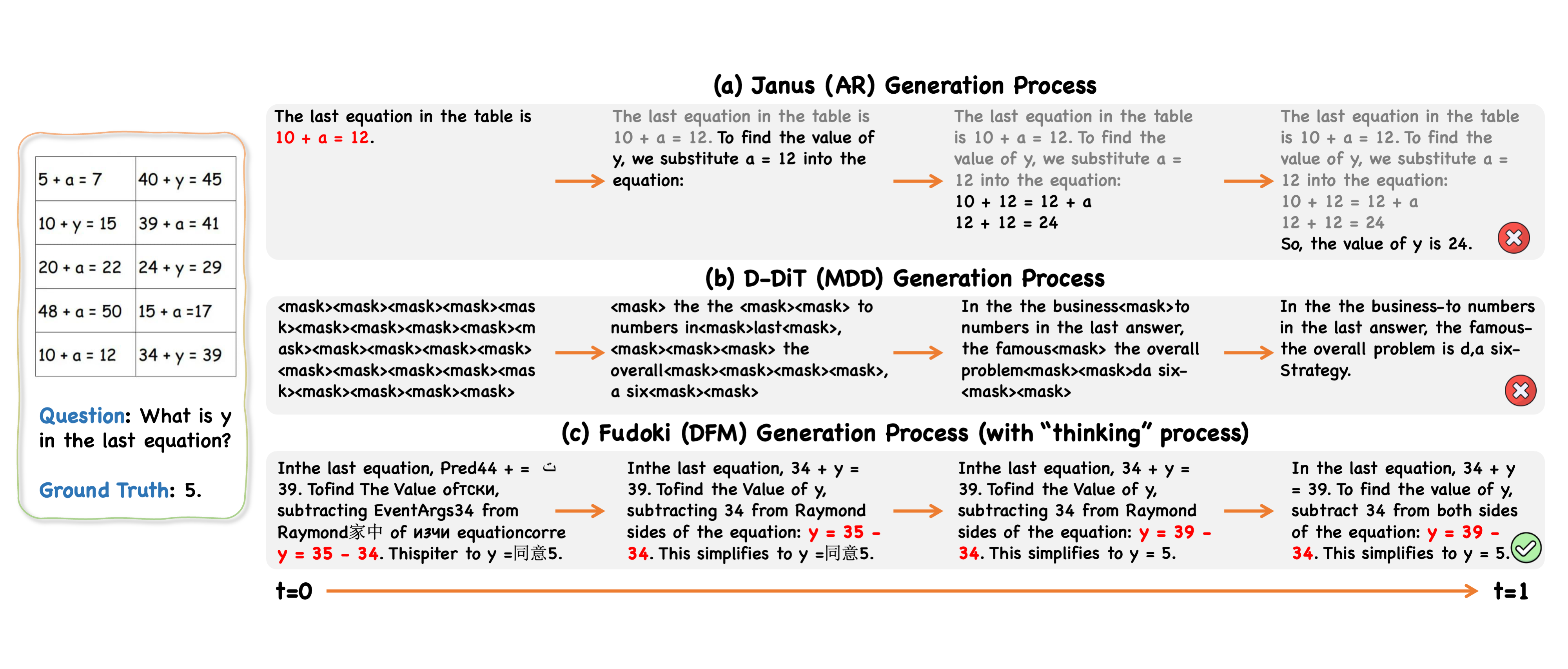

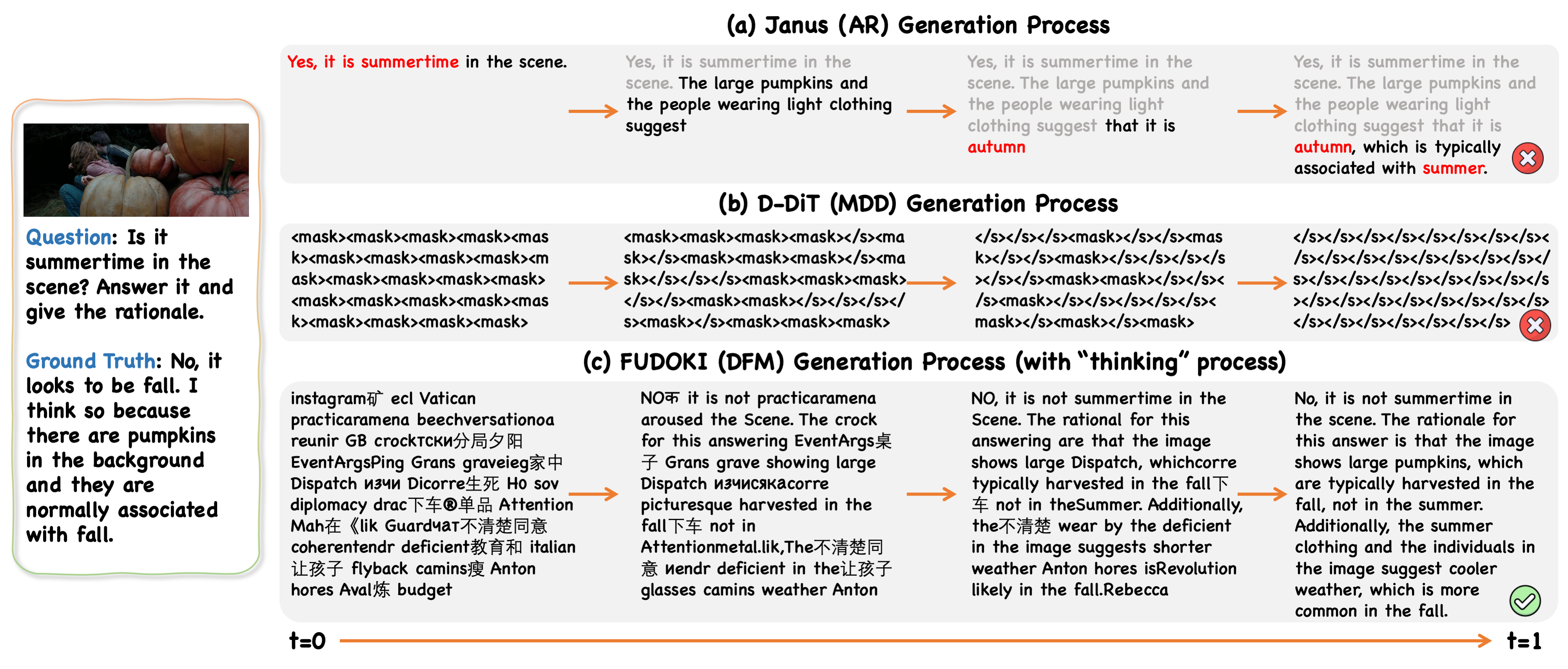

Generation process of different methods:

(a) AR-based Janus: Can only generate tokens sequentially; if an error is made in the initial step, subsequent outputs will consistently propagate this mistake.

(b) D-DiT (mask-based discrete diffusion, MDD): Cannot revise tokens once unmasked, making errors irreversible and leading to poor generalization.

(c) FUDOKI (discrete flow matching, DFM): Allows generated tokens to be revised in subsequent steps, enabling step-by-step reasoning and error correction for more accurate answers.
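
The difference between (b) and (c) can be illustrated with a toy sketch. It is deterministic and leaves out the model, the probabilities, and the velocity field. The function names, the `<mask>` token, and the two "guesses" are hypothetical and stand in for model predictions at two successive steps.

```python
MASK = "<mask>"


def masked_update(current, proposal):
    """Mask-based step: only still-masked positions accept a proposed token.

    Tokens that were already unmasked stay frozen, even if they are wrong.
    """
    return [p if c == MASK else c for c, p in zip(current, proposal)]


def revisable_update(current, proposal):
    """Revisable step: every position may move to the proposed token,

    including positions that already hold a (possibly wrong) token.
    """
    return [p for _, p in zip(current, proposal)]


# Hypothetical predictions at two successive steps.
first_guess = ["a", "blue", "cube"]   # early step: one token is wrong
second_guess = ["a", "red", "cube"]   # later step: more context, error fixed

start = [MASK] * 3
mdd = masked_update(masked_update(start, first_guess), second_guess)
dfm = revisable_update(revisable_update(start, first_guess), second_guess)

print(mdd)  # the early mistake is locked in
print(dfm)  # the early mistake is corrected
```

In the mask-based run the wrong token survives because its position was already unmasked when the better prediction arrived. The revisable run overwrites it, which is the behavior the comparison above attributes to discrete flow matching.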

@article{wang2025fudokidiscreteflowbasedunified,
  title={FUDOKI: Discrete Flow-based Unified Understanding and Generation via Kinetic-Optimal Velocities},
  author={Jin Wang and Yao Lai and Aoxue Li and Shifeng Zhang and Jiacheng Sun and Ning Kang and Chengyue Wu and Zhenguo Li and Ping Luo},
  year={2025},
  eprint={2505.20147},
  archivePrefix={arXiv},
  primaryClass={cs.CV},
  url={https://arxiv.org/abs/2505.20147}
}